Introduction: Docker for DevOps

In the modern software development landscape, Docker has become a game-changer, especially in the context of DevOps. As businesses strive to achieve faster development cycles and more reliable deployments, Docker’s containerization technology offers a streamlined solution for building, shipping, and running applications across diverse environments. Its ability to package applications into containers has fundamentally changed how teams approach both development and operations.

For DevOps teams, Docker provides a common framework that unifies the development and deployment processes, eliminating inconsistencies between development, testing, and production environments. With Docker, developers can create lightweight, portable containers that run consistently on any infrastructure—whether it’s on-premises or in the cloud. This ensures that code behaves the same way no matter where it is deployed, reducing “it works on my machine” issues.

This guide moves from the basics of Docker to how it fits into the DevOps pipeline. By the end, you should understand how Docker supports faster deployments, better scalability, and more efficient automation in DevOps environments. Whether you’re new to Docker or looking to solidify your foundation, it aims to give you the knowledge to implement Docker for DevOps practices in your organization.

Let’s dive into the details of how Docker is revolutionizing the deployment process, enhancing automation, and supporting continuous integration and delivery in DevOps workflows.

What is Docker and Why It’s Important for DevOps

Docker is an open-source containerization platform that enables developers and IT teams to build, package, and run applications inside isolated environments called containers. Unlike virtual machines, Docker containers share the host system’s OS kernel instead of each running a full guest operating system, which makes them lightweight, faster to start, and easier to manage. By encapsulating the dependencies and configuration required to run an application, Docker helps the application behave consistently across different environments.

Containerization is the core concept behind Docker, and it’s particularly transformative in the DevOps ecosystem. It allows developers to create “write once, run anywhere” applications, ensuring portability across platforms and minimizing environment-specific issues. This simplifies collaboration between development and operations teams by standardizing the runtime environment, reducing errors caused by configuration mismatches, and accelerating the deployment process.

Why Docker is Essential for DevOps:

- Simplified Development, Testing, and Deployment: Docker allows developers to work in consistent environments, ensuring that what works in development will also work in production. Containers can be built, tested, and deployed independently, making continuous integration and continuous delivery (CI/CD) pipelines smoother and more efficient.

- Improved Resource Utilization: Since Docker containers share the host OS kernel, they use less memory and computing resources than traditional virtual machines, enabling more containers to run on the same hardware. This leads to better server efficiency and cost savings.

- Portability and Consistency: Docker ensures that the application, along with its dependencies, runs consistently regardless of the underlying platform. Whether you’re deploying on cloud infrastructure, on-premises servers, or hybrid environments, Docker containers behave the same.

- Isolation and Security: Each Docker container is isolated, allowing applications with different dependencies or conflicting software versions to run on the same machine without interference. This modularity improves both security and scalability, as each application component can be deployed, updated, or scaled independently.

- Faster Time-to-Market: The lightweight nature of Docker containers speeds up development, testing, and deployment cycles. This allows teams to release new features or patches more frequently, increasing agility and responsiveness to market demands.

In summary, Docker’s role in DevOps goes beyond just containerization. It bridges the gap between development and operations teams by simplifying workflows, enhancing application portability, and ensuring consistency across the entire software development lifecycle. The combination of its lightweight nature, flexibility, and scalability makes Docker indispensable for any modern DevOps strategy.

Core Concepts of Docker

Docker Images

Docker Images are at the heart of containerization technology, providing the foundation for Docker containers. A Docker image is essentially a blueprint that contains everything required to run an application, including code, libraries, dependencies, environment variables, and system tools. These images ensure that applications are portable, lightweight, and consistent across various environments, simplifying both development and deployment processes.

What is a Docker Image?

A Docker image is a standalone, executable package that includes all the components necessary to run a specific application. It acts as a snapshot of the software environment, encapsulating the code, dependencies, runtime, and other system tools. Docker images are designed to be reusable and consistent, allowing developers to create environments that can be shared and deployed across different platforms without any discrepancies.

Key Components of Docker Images

Docker images consist of multiple layers, which stack on top of one another to create the final executable package. Each layer represents a change or addition to the image, such as new software dependencies or configuration updates. This layered architecture offers several advantages, including caching, reduced image size, and faster builds.

Here’s a breakdown of the components:

- Base Image: The foundational layer an image starts from, typically a minimal operating system userland. An image built from nothing at all starts with FROM scratch.

- Parent Image: The existing image named in the Dockerfile’s FROM instruction. The layers of your image are stacked on top of it.

- Layers: Instructions that change the filesystem (RUN, COPY, ADD) each add a layer. Other instructions, such as ENV or CMD, only record metadata in the image configuration.

- Container Layer: Once a Docker container is created from an image, it adds a writable layer where modifications can be stored.

- Manifest: A JSON document that lists the image’s layers and configuration by content digest. Registries use it to resolve a tag to the exact content to download.

How Docker Images Work

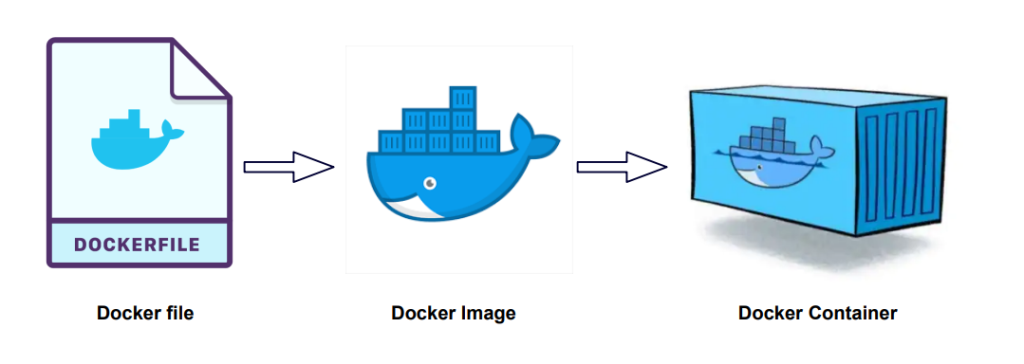

Docker images are created using a Dockerfile, which is a text document containing instructions for assembling the image. Each instruction is a build step: steps that change the filesystem add a new image layer, and the results are cached so that unchanged steps are reused in subsequent builds.

The key steps in the lifecycle of a Docker image include:

- Building: Developers use a Dockerfile to specify the image’s components. This includes the base image, software dependencies, and environment configurations.

- Pushing/Pulling: Images can be uploaded to a container registry like Docker Hub for easy sharing. Other developers can pull these images from the registry to use locally or in production environments.

- Running: Once an image is created, it can be used to launch Docker containers. The container runs in its isolated environment, allowing the application to work as intended, regardless of the underlying infrastructure.

Docker Image Commands

Here are some essential commands to interact with Docker images:

- docker image build: Creates an image from a Dockerfile.

- docker image pull: Downloads an image from a registry.

- docker image push: Uploads an image to a registry.

- docker image rm: Removes an image from your local system.

- docker image inspect: Shows details about a specific image.

- docker image history: Displays the layers and changes made to an image over time.
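As a rough illustration, the commands above can be chained into one round trip. This is a sketch under assumptions, not a prescribed workflow: the function name image_round_trip and the image name myorg/myapp:1.0 are hypothetical, a Dockerfile is assumed to exist in the current directory, and the push step assumes you have already run docker login.

```shell
# Build, inspect, publish, and clean up a single image.
# "myorg/myapp:1.0" in the usage line is a hypothetical name, not taken from the guide.
image_round_trip() {
  ref="$1"
  docker image build -t "$ref" . &&                     # build from ./Dockerfile
  docker image history "$ref" &&                        # list the layers, newest first
  docker image inspect --format '{{.Id}}' "$ref" &&     # print the image ID
  docker image push "$ref" &&                           # upload to the registry
  docker image rm "$ref"                                # delete the local copy
}

# Usage: image_round_trip myorg/myapp:1.0
```

Chaining with && stops the sequence at the first failing step, so a failed build never reaches the push.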

Benefits of Docker Images

- Portability: Docker images ensure that an application will run the same way across different environments—local, staging, or production—eliminating compatibility issues.

- Consistency: Developers can create standardized environments for all stages of the software lifecycle, minimizing “works on my machine” problems.

- Efficiency: The layered architecture enables reusing image layers across multiple builds, improving build times and reducing storage usage.

- Versioning and Collaboration: Docker images can be versioned, ensuring that teams can roll back to previous versions if needed. Sharing images across teams simplifies collaboration.

- Lightweight: Docker containers are much lighter than traditional virtual machines because they share the host OS kernel.

By understanding Docker images and how they work, developers can streamline the software development process, ensuring applications are easy to build, test, and deploy.

Docker Containers

A Docker container is a running, isolated instance of an image: a portable unit that packages an application together with the environment it needs. This encapsulation lets the same container run on any Linux or Windows host that has a compatible container runtime. Containerization simplifies application deployment and management, which makes it a critical tool in modern software development.

Understanding Docker Containers

At its core, a Docker container is a standardized unit of software that includes everything needed to run an application: code, libraries, system tools, and settings. Unlike traditional virtual machines (VMs) that require a full operating system, Docker containers share the host OS kernel, which significantly reduces overhead and increases efficiency. This lightweight approach allows for faster application startup times and more efficient resource utilization.

Think of Docker containers as another form of virtualization. While VMs allow multiple operating systems to run on a single physical machine, containers virtualize the operating system itself, splitting it into isolated compartments that can run multiple applications simultaneously. Each container is self-sufficient, providing a consistent runtime environment regardless of the underlying infrastructure.

How Docker Containers Work

The Docker daemon is responsible for building, running, and managing containers. It takes commands from the Docker client, a command-line interface that allows developers to create and control containers. When a developer inputs a command, the daemon assembles the necessary components and executes the instructions, resulting in a fully functional container that can run applications.

Docker’s architecture allows developers to break down applications into smaller, easily transportable components, facilitating a more modular approach to software development. This modularity enhances the portability of applications, enabling them to run anywhere Docker is installed, whether on a developer’s laptop, a testing environment, or in the cloud.
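To make the client and daemon interaction concrete, here is a minimal sketch of one container’s life cycle. The function name container_demo, the container name demo-web, and host port 8080 are assumptions made for this example; nginx:alpine is used only because it is a small public image.

```shell
# Start, observe, and remove a throwaway container.
# Every docker call below is a request from the CLI client to the daemon.
container_demo() {
  name="${1:-demo-web}"                                  # hypothetical container name
  docker version --format '{{.Client.Version}} -> {{.Server.Version}}' &&  # client and daemon versions
  docker run -d --name "$name" -p 8080:80 nginx:alpine &&  # daemon pulls the image if needed, then starts it
  docker ps --filter "name=$name" &&                     # confirm the container is running
  docker logs "$name" &&                                 # read its stdout and stderr
  docker stop "$name" &&                                 # stop it gracefully
  docker rm "$name"                                      # delete the stopped container
}

# Usage: container_demo
```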

Docker’s Evolution

Docker was introduced in March 2013, created by Solomon Hykes and released as open source software. Since then, it has garnered significant attention and investment, including $40 million in venture capital funding in 2014. The Docker platform consists of the Docker Engine, a powerful runtime and packaging tool, and Docker Hub, a cloud-based service for sharing applications.

The lightweight nature of containers makes them particularly appealing for cloud applications and development environments. With minimal software overhead required to run an application, Docker enables faster development cycles and streamlined testing processes. Multiple development teams can work concurrently on different components of an application, while containers serve as isolated sandboxes for testing, reducing the risk of affecting the entire system.

Portability and Scalability

One of the key advantages of Docker containers is their portability. Once an application is packaged into a container, it can be deployed consistently across various environments without concern for compatibility issues. This portability extends to scalability as well; containers can be rapidly scaled up or down based on demand, making them ideal for dynamic workloads.

However, while container technology offers significant benefits, it also presents challenges. The reliance on Linux and Windows environments can limit its applicability in other operating systems. Additionally, as more teams collaborate on containerized applications, the architecture can become complex, necessitating a higher level of expertise to manage effectively. Security also becomes a concern, as containers introduce new attack surfaces and potential vulnerabilities that must be monitored and controlled.

In summary, Docker containers provide a robust and efficient framework for modern software development, allowing applications to be built, tested, and deployed with unparalleled speed and flexibility. Understanding Docker containers is essential for developers looking to leverage the full potential of containerization technology.

Dockerfile

A Dockerfile is a crucial component in the Docker ecosystem, serving as a script that contains a series of instructions for building Docker images. It acts as a blueprint for your application, defining everything required to create an environment in which your software can run. As organizations increasingly adopt Docker for its high scalability, availability, and security, understanding how to create and utilize Dockerfiles becomes essential for developers and operations teams.

What is a Dockerfile?

At its core, a Dockerfile is a plain text file that consists of various commands and parameters used to automate the creation of Docker images. These images are read-only templates that encapsulate all necessary components, including application code, libraries, dependencies, and the operating system. When executed, the instructions in a Dockerfile build an image that can then be used to launch one or more Docker containers.

Dockerfile Syntax

The syntax of a Dockerfile is straightforward, with each instruction typically placed on a new line. Comments can be included using the # symbol. Here’s a basic example:

    # Print a welcome message
    RUN echo "Welcome to Docker"

Key Dockerfile Commands

Dockerfiles utilize specific commands to dictate the build process. Here are some of the most commonly used instructions:

- FROM: This command sets the base image for subsequent instructions. It must be the first instruction in a Dockerfile; only comments, parser directives, and ARG may come before it.

    FROM ubuntu:18.04

- COPY: This command is used to copy files from your local file system into the image.

    COPY ./myapp /app

- RUN: This instruction executes commands in the shell to install packages or configure the environment within the image.

    RUN apt-get update && apt-get install -y python3

- CMD: This command specifies the default command to run when a container is launched from the image. It can be overridden by providing a command when running the container.

    CMD ["python3", "/app/myapp.py"]

- ENTRYPOINT: This instruction sets the command that will always run when the container starts, and can include arguments. Anything given after the image name in docker run is appended to it as further arguments.

    ENTRYPOINT ["python3"]

Building a Docker Image from a Dockerfile

Creating a Docker image from a Dockerfile involves a few steps:

- Create a Directory: Start by creating a directory to store your Dockerfile and related files.

    mkdir mydockerapp
    cd mydockerapp

- Create a Dockerfile: Use a text editor to create a new file named Dockerfile and add your instructions.

    FROM ubuntu:18.04
    RUN apt-get update && apt-get install -y python3
    COPY ./myapp /app
    CMD ["python3", "/app/myapp.py"]

- Build the Image: Run the following command to build the image. The trailing . is the build context, the directory whose files are available to COPY; by default Docker also looks for the Dockerfile there:

    docker build -t mydockerapp .

- Verify the Image: Check if your image has been created successfully with:

    docker images

- Run a Container: Create a container from your newly built image:

    docker run --name myappcontainer mydockerapp

Conclusion

In summary, a Dockerfile is a powerful tool for defining the environment and instructions needed to build Docker images. Its straightforward syntax and command structure allow developers to create consistent, portable, and efficient application environments. As Docker continues to gain traction in various industries, mastering Dockerfiles will undoubtedly enhance your software deployment and management capabilities. By following best practices and experimenting with different commands, you can optimize your Dockerfiles for better performance and scalability, ensuring your applications run smoothly across various environments.
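The build steps from this section can be collected into a small script. The sketch below reuses the Dockerfile and names from the steps above; the function names write_dockerfile and build_and_run are hypothetical, the --rm flag is an addition for tidiness, and the code assumes a myapp directory containing myapp.py sits next to the Dockerfile.

```shell
# Write the Dockerfile from the steps above into a directory (default: mydockerapp).
write_dockerfile() {
  dir="${1:-mydockerapp}"
  mkdir -p "$dir" &&
  cat > "$dir/Dockerfile" <<'EOF'
FROM ubuntu:18.04
RUN apt-get update && apt-get install -y python3
COPY ./myapp /app
CMD ["python3", "/app/myapp.py"]
EOF
}

# Build the image, run it with its default CMD, then override CMD for one run.
# Assumes "$dir/myapp/myapp.py" exists; image and container names come from the guide.
build_and_run() {
  dir="${1:-mydockerapp}"
  docker build -t mydockerapp "$dir" &&
  docker run --rm --name myappcontainer mydockerapp &&
  docker run --rm mydockerapp python3 --version   # arguments after the image name replace CMD
}

# Usage: write_dockerfile && build_and_run
```

The last command shows the CMD override in practice: the image still defaults to running the application, but a single run can substitute another command without rebuilding.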

Docker Compose

Docker Compose is a powerful tool designed to simplify the management of multi-container applications. In many projects, you may find yourself needing to run several interconnected services, such as databases, application servers, and message brokers. Manually starting and managing each service in its own container can become cumbersome and error-prone. This is where Docker Compose comes in, allowing you to define and run multiple containers as a single application.

Why Use Docker Compose?

When working on complex applications, especially those involving microservices, it can be tedious to manage each service individually. For example, a project may require services like Node.js, MongoDB, RabbitMQ, and Redis. Docker Compose allows you to define all these services in a single configuration file, streamlining the process of starting, stopping, and managing your application stack.

What is Docker Compose?

Docker Compose uses a YAML file to define the services, networks, and volumes needed for your application. This file specifies how each container should be built, what images to use, and any environment variables that should be set. By using a single command, you can bring up or down all the services defined in your Compose file.

Creating a Docker Compose File

A Docker Compose file, typically named docker-compose.yml, contains configuration settings for each service. Below is an example configuration for a Node.js application that uses MongoDB:

    version: '3'
    services:
      app:
        image: node:latest
        container_name: app_main
        restart: always
        command: sh -c "yarn install && yarn start"
        ports:
          - 8000:8000
        working_dir: /app
        volumes:
          - ./:/app
        # Passed to the application as-is. Inside the container, localhost is the
        # container itself; other services are reached by service name (for example, mongo).
        environment:
          MYSQL_HOST: localhost
          MYSQL_USER: root
          MYSQL_PASSWORD:
          MYSQL_DB: test
      mongo:
        image: mongo
        container_name: app_mongo
        restart: always
        ports:
          - 27017:27017
        volumes:
          - ~/mongo:/data/db

Breakdown of the Docker Compose File

- version: Specifies the Compose file format version. Recent Docker Compose releases treat this key as obsolete and ignore it.

- services: Defines the services that make up your application.

- app: Represents the Node.js application service.

  - image: The Docker image to use for the container.

  - container_name: A custom name for the container.

  - restart: Policy for restarting the container if it fails.

  - command: The command to run inside the container.

  - ports: Maps the host port to the container port.

  - working_dir: The working directory inside the container.

  - volumes: Mounts the current directory to the /app directory in the container.

  - environment: Sets environment variables for the container.

- mongo: Represents the MongoDB service.

  - Similar to the app service, it defines the MongoDB image and port mappings.

Running Your Multi-Container Application

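After creating your docker-compose.yml file, you can build and run your application using the following commands. With Compose v2, bundled with Docker Desktop, the same subcommands are written docker compose (with a space).

- Build the Containers: This step only does work for services that declare a build section. The example above uses prebuilt images, which are pulled when the stack starts.

    docker-compose build

- Run the Containers:

    docker-compose up

  To run the containers in detached mode (in the background), use:

    docker-compose up -d

- Check the Status of Running Containers:

    docker-compose ps

Conclusion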

Docker Compose significantly simplifies the management of multi-container applications. By defining your entire application stack in a single YAML file, you can quickly spin up and manage your services with minimal effort. This approach not only enhances development speed but also ensures consistency across different environments. Whether you’re working on a small project or a large-scale application, Docker Compose is an invaluable tool for streamlining your workflow.

By leveraging Docker Compose, you can focus on developing your application rather than managing the infrastructure, allowing for faster iterations and smoother deployments.
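As a rough sketch of the Compose workflow described in this section, the function below validates a Compose file, starts the stack, shows its state and recent logs, and tears it down again. The function name compose_cycle is hypothetical, and the code uses the Compose v2 spelling docker compose; with the standalone v1 binary the same subcommands follow docker-compose instead.

```shell
# Validate, start, inspect, and tear down a Compose stack.
compose_cycle() {
  file="${1:-docker-compose.yml}"
  docker compose -f "$file" config --quiet &&   # validate the file without printing it
  docker compose -f "$file" up -d &&            # start all services in the background
  docker compose -f "$file" ps &&               # list service containers and their state
  docker compose -f "$file" logs --tail=20 &&   # last 20 log lines per container
  docker compose -f "$file" down                # stop and remove containers and the default network
}

# Usage: compose_cycle docker-compose.yml
```

Running config first catches indentation and key errors before any container is started, which is usually cheaper than debugging a half-started stack.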

Setting Up Docker for DevOps

In this section, we’ll walk through the process of installing and configuring Docker on your local machine, providing you with the foundation needed to start utilizing Docker in your DevOps practices. Follow these step-by-step instructions to ensure a smooth installation.

Step 1: Check System Requirements

Before diving into the installation, make sure your system meets the following requirements:

- Operating System: Windows 10 (64-bit) or Windows 11 (64-bit).

- Processor: 64-bit processor with Second Level Address Translation (SLAT) capability.

- RAM: Minimum of 4 GB.

- Virtualization: Hardware virtualization support enabled in BIOS.

- Windows Features: WSL 2 (Windows Subsystem for Linux) or Hyper-V must be enabled, depending on the backend you choose. The Hyper-V backend and Windows containers also need the Containers feature.

Step 2: Download Docker Desktop

- Visit the official Docker website to download Docker Desktop for Windows.

- Click on the download link for Docker Desktop and save the installer (Docker Desktop Installer.exe) to your system.

Step 3: Install Docker Desktop

- Locate the downloaded Docker Desktop Installer.exe file and double-click it to run the installer.

- During the installation, make sure to enable the Hyper-V and WSL 2 features when prompted.

- Follow the installation prompts, and once completed, click Close.

Step 4: Start Docker Desktop

- After installation, Docker Desktop does not start automatically. To launch it, search for “Docker Desktop” in your Start menu and select it.

- Docker may prompt you to go through an onboarding tutorial. This tutorial will guide you through creating a Docker image and running your first container.

Step 5: Verify Docker Installation

To confirm that Docker has been successfully installed and is running:

- Open the Command Prompt or PowerShell.

- Run the following command to check the Docker version:

    docker --version

- You can also run a test container with the following command:

    docker run hello-world

  This command will pull a test image and run a container to verify that Docker is working correctly.

Step 6: Configure Docker Settings (Optional)

- Docker User Group: If your user account is different from the admin account, you may need to add your user to the docker-users group. Run the following command in a PowerShell session started as administrator:

    net localgroup docker-users <your-username> /add

- Restart your computer, or sign out and back in, to ensure all settings take effect.

Step 7: Explore Docker Desktop

Once Docker Desktop is running, you can start exploring its features. The Docker Desktop interface provides an integrated user experience to view and manage your Docker containers and images effectively.

Conclusion

You have now successfully installed and configured Docker on your local machine! With Docker set up, you are ready to dive deeper into containerization and integrate Docker into your DevOps workflow.

If you have any questions or run into issues during installation, feel free to leave your comments below. Happy containerizing!

Docker in DevOps: CI/CD Pipeline Integration

In the realm of DevOps, the integration of Docker into the Continuous Integration and Continuous Deployment (CI/CD) pipeline has revolutionized how software development teams manage their workflows. This section explores how Docker enhances the CI/CD process, emphasizing the importance of consistent environments and streamlined deployments.

Introduction to CI/CD

Continuous Integration (CI) and Continuous Deployment (CD) are practices that enable development teams to automate and streamline their software delivery processes. CI focuses on the frequent integration of code changes into a shared repository, where automated builds and tests are run. This ensures that any issues are detected early, enhancing code quality and reducing integration problems.

CD extends CI by automating the deployment of code to production environments, ensuring that new features and fixes can be delivered to users swiftly and reliably. Together, these practices improve collaboration, reduce manual errors, and enhance overall productivity.

Why Use Docker in Your CI/CD Pipeline

Incorporating Docker into the CI/CD pipeline offers numerous advantages:

- Reproducible Builds: Docker containers encapsulate the application and its dependencies, ensuring that the environment remains consistent across development, testing, and production stages. This eliminates the “it works on my machine” problem, as containers behave the same regardless of where they are deployed.

- Faster Deployments: Docker images can be quickly built, tested, and deployed. The lightweight nature of containers allows for rapid scaling and deployment, significantly speeding up the release cycle.

- Scalability: Docker makes it easy to scale applications horizontally. As demand increases, more containers can be spun up to handle the load without disrupting existing services.

- Isolation: Each container runs in its isolated environment, preventing conflicts between applications and their dependencies. This isolation enhances security and stability, as issues in one container do not affect others.

Setting Up Your Docker Environment

To effectively integrate Docker into your CI/CD pipeline, you must first set up your Docker environment:

- Install Docker: Begin by installing Docker on your local machine and CI/CD platform (e.g., Jenkins, GitHub Actions, GitLab CI).

- Verify Installation: Check that Docker is installed correctly by running docker --version in your terminal.

- Configure CI/CD Platform: Ensure your CI/CD platform is set up to use Docker, which may involve installing Docker on build agents or configuring Docker services.
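On a build agent, the verification step is often worth scripting so that a job fails early with a clear message. The following is a minimal sketch, assuming a POSIX shell on the agent; the function name docker_ready and its exit codes are choices made for this example.

```shell
# Return 0 only when the docker CLI is on PATH and the daemon answers.
docker_ready() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found" >&2
    return 1
  fi
  if ! docker info >/dev/null 2>&1; then      # docker info needs a reachable daemon
    echo "docker daemon not reachable" >&2
    return 2
  fi
  docker --version                            # print the client version for the build log
}

# Usage: docker_ready || exit 1
```

Checking docker info in addition to docker --version matters because the version command succeeds even when the daemon is stopped.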

Building a Custom Docker Image for Your Application

Creating a custom Docker image is crucial for deploying your application through the CI/CD pipeline:

- Create a Dockerfile: This file outlines how your application should be built. Specify the base image, set the working directory, copy application files, install dependencies, expose necessary ports, and define the command to run the application. Here’s an example Dockerfile for a Node.js application:

    FROM node:14
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 8080
    CMD ["npm", "start"]

- Build the Image: Use the command docker build -t your-image-name . to create your Docker image.

- Push to Docker Hub: After building, push the image to a Docker registry like Docker Hub using docker push your-image-name. For Docker Hub, the image name must include your account namespace (for example, your-username/your-image-name), and you must first sign in with docker login.
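In a pipeline it is common to push each build under an immutable tag as well as latest, so that a deployment can be pinned or rolled back. Here is one way that might look; the function names image_ref and build_and_push, the account exampleuser, and the choice of the Git commit hash as the tag are all assumptions for this sketch.

```shell
# Compose a registry reference of the form "<namespace>/<name>:<tag>".
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# Build once, tag the result twice, and push both tags.
# Assumes a prior "docker login" and a Dockerfile in the current directory.
build_and_push() {
  ref="$(image_ref "$1" "$2" "$3")"
  latest="$(image_ref "$1" "$2" latest)"
  docker build -t "$ref" -t "$latest" . &&
  docker push "$ref" &&
  docker push "$latest"
}

# Usage (hypothetical account; tag taken from the current Git commit):
#   build_and_push exampleuser your-image-name "$(git rev-parse --short HEAD)"
```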

Integrating Docker into Your CI/CD Pipeline

Once your Docker image is ready, it’s time to integrate it into your CI/CD pipeline:

- Update Pipeline Configuration: Modify your CI/CD configuration to include steps for building, testing, and deploying your Docker containers.

- Testing: Ensure that your application’s tests run within Docker containers, maintaining consistency and reliability.

- Deployment: Configure your pipeline to deploy Docker containers to your target environments (staging, production) using Docker Compose or similar tools. Here’s a sample GitHub Actions configuration for a CI/CD pipeline:

    name: CI/CD Pipeline
    on: [push]
    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v2
          - name: Set up Docker Buildx
            uses: docker/setup-buildx-action@v1
          - name: Login to Docker Hub
            uses: docker/login-action@v1
            with:
              username: ${{ secrets.DOCKER_HUB_USERNAME }}
              password: ${{ secrets.DOCKER_HUB_ACCESS_TOKEN }}
          - name: Build and push Docker image
            uses: docker/build-push-action@v2
            with:
              context: .
              push: true
              tags: your-image-name:latest
      deploy:
        runs-on: ubuntu-latest
        needs: build
        steps:
          - name: Checkout code
            uses: actions/checkout@v2
          - name: Deploy to production server
            run: |
              ssh your-server "docker pull your-image-name:latest && docker-compose up -d"

Scaling Your CI/CD Pipeline with Docker

As your application grows, Docker can help scale your CI/CD pipeline effectively:

- Parallelize Pipeline Stages: Run multiple stages concurrently using Docker containers to reduce build and deployment times.

- Optimize Resource Management: Use Docker’s resource allocation features to ensure efficient utilization during pipeline execution.

- Automate Scaling: Implement strategies to automatically scale your application based on demand, adding or removing containers as needed.
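Two of the points above map directly onto CLI flags: resource limits on a single container, and replica counts for a Compose service. The sketch below is illustrative only; the function names run_limited and scale_service and the default limits (1 CPU, 512 MB) are assumptions.

```shell
# Run a container with CPU and memory caps.
run_limited() {
  image="$1"; cpus="${2:-1}"; memory="${3:-512m}"
  docker run -d --cpus "$cpus" --memory "$memory" "$image"
}

# Scale one Compose service to a given number of replicas.
scale_service() {
  docker compose up -d --scale "$1=$2"
}

# Usage:
#   run_limited your-image-name 0.5 256m
#   scale_service app 3
```

Scaling a service beyond one replica generally requires that it not set a fixed container_name or a fixed host port, since both must be unique on the host.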

Monitoring Your CI/CD Pipeline

To ensure your CI/CD pipeline operates smoothly, monitoring is essential:

- Log Analysis: Collect and analyze logs from pipeline stages to identify issues.

- Monitoring Tools: Utilize tools like Prometheus or Grafana for real-time monitoring and visualization of metrics.

- Health Checks: Configure health checks for your application services to detect issues early.

- Performance Reviews: Regularly review performance metrics to identify bottlenecks and areas for improvement.
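A health check becomes useful in a pipeline when a later stage waits on it. The following is a minimal polling sketch, assuming the image or run command defines a HEALTHCHECK; without one, the health field is typically absent and the inspect call fails. The function name wait_healthy and its defaults (30 attempts, 2 seconds apart) are hypothetical.

```shell
# Poll a container's health status until it is "healthy" or attempts run out.
wait_healthy() {
  container="$1"; attempts="${2:-30}"; delay="${3:-2}"
  i=0
  status=""
  while [ "$i" -lt "$attempts" ]; do
    # Treat an inspect failure (no such container, no HEALTHCHECK) as an empty status.
    status="$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null)" || status=""
    if [ "$status" = "healthy" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "container $container not healthy (last status: ${status:-unknown})" >&2
  return 1
}

# Usage: wait_healthy app_main 30 2 || exit 1
```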

Conclusion

Integrating Docker into your CI/CD pipeline enhances the efficiency and reliability of your software development process. By providing consistency across different environments and enabling faster deployments, Docker facilitates a smoother DevOps workflow. With the right setup, your team can leverage Docker’s power to deliver high-quality applications more quickly, ensuring that your software meets user demands effectively.

Automating Deployments with Docker

In today’s fast-paced software development environment, the need for speed and efficiency has become paramount. Docker, a leading containerization platform, has transformed how developers build, deploy, and manage applications. By leveraging Docker’s capabilities, teams can automate their deployment processes, ensuring consistency, scalability, and reliability throughout the development lifecycle. In this section, we will explore how Docker facilitates automated deployments and provide an example of using Docker within a deployment automation pipeline with tools like Jenkins or GitLab CI.

Understanding Docker’s Role in Deployment Automation

Docker enables developers to package their applications and all their dependencies into lightweight, portable containers. These containers can run consistently across various environments, from local development machines to production servers. This uniformity eliminates the “it works on my machine” problem and significantly streamlines the deployment process.

By utilizing Docker, developers can automate the deployment pipeline. This is achieved by creating a Dockerfile, which contains instructions for building a Docker image. This image encapsulates the application, its runtime, libraries, and any other dependencies required to run the software. Once a Docker image is built, deploying it can be done with a single command, making the process efficient and straightforward.

Benefits of Automating Deployments with Docker

- Consistency: Docker ensures that applications run in the same environment across development, testing, and production. This consistency reduces the risk of deployment failures due to environmental differences.

- Speed: Automating the deployment process with Docker significantly accelerates delivery. Teams can deploy updates faster, improving overall productivity.

- Scalability: Docker allows for easy scaling of applications. Developers can spin up multiple instances of a container to handle increased demand without manual intervention.

- Reduced Risk of Errors: Automation minimizes human error during deployments. With a well-defined pipeline, developers can focus on writing code rather than worrying about deployment inconsistencies.

Example: Automating Deployments with Jenkins

To illustrate how Docker can be integrated into an automated deployment pipeline, let’s consider an example using Jenkins. Jenkins is a popular open-source automation server that facilitates CI/CD processes.

Step 1: Setting Up Jenkins with Docker

- Install Jenkins: You can set up Jenkins in a Docker container using the following command:

    docker run -d -p 8080:8080 -p 50000:50000 jenkins/jenkins:lts

- Access Jenkins: Navigate to http://localhost:8080 to access the Jenkins interface.

- Install Required Plugins: In Jenkins, go to Manage Jenkins > Manage Plugins, and install the Docker plugin and any other necessary plugins for CI/CD.

Step 2: Creating a Jenkins Pipeline for Docker Deployment

- Create a New Pipeline: In Jenkins, create a new item and select “Pipeline.”

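- Configure the Pipeline: Add the following code to the pipeline script. The sh steps assume that the agent running the job has the Docker CLI installed and can reach a Docker daemon, which the stock jenkins/jenkins:lts image does not provide out of the box: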

    pipeline {
        agent any
        stages {
            stage('Build') {
                steps {
                    script {
                        // Build the Docker image
                        sh 'docker build -t your-image-name .'
                    }
                }
            }
            stage('Test') {
                steps {
                    script {
                        // Run tests inside a Docker container
                        sh 'docker run --rm your-image-name npm test'
                    }
                }
            }
            stage('Deploy') {
                steps {
                    script {
                        // Deploy the Docker container
                        sh 'docker run -d -p 80:80 your-image-name'
                    }
                }
            }
        }
    }

- Run the Pipeline: Trigger the pipeline manually or set it to run on every push to your repository. Jenkins will build the Docker image, run tests, and deploy the application automatically.
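One practical wrinkle in the Deploy stage: docker run -d -p 80:80 starts a new container on every run, and a second run fails because the earlier container still holds host port 80. A minimal shell sketch of a repeatable deploy step might look like the following. The redeploy function name, the container name argument, and the DOCKER override variable are illustrative assumptions, not part of Docker or Jenkins.

```shell
# Replace the running container with a fresh one started from the given image.
# Set DOCKER="echo docker" for a dry run that only prints the commands.
redeploy() {
  image="$1"
  name="$2"
  docker_cmd="${DOCKER:-docker}"
  # Remove the previous container, if any, so that host port 80 is free again.
  $docker_cmd rm -f "$name" 2>/dev/null || true
  # Start the new container under a fixed name so the next deploy can find it.
  $docker_cmd run -d --name "$name" -p 80:80 "$image"
}

# Usage (requires Docker):
#   redeploy your-image-name your-container-name
```

Giving the container a fixed name is the design choice that makes the step repeatable. The trade-off is a short gap between removing the old container and starting the new one.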

Example: Automating Deployments with GitLab CI

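GitLab CI is another widely used tool for implementing CI/CD pipelines, and it integrates with Docker. The example below assumes a single runner that can execute docker commands (for instance, a shell executor on a host with Docker installed), so that the image built in one job is still available to the next. Here’s how to set it up: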

- Create a .gitlab-ci.yml File: In your project repository, create a file named .gitlab-ci.yml with the following content:

    stages:
      - build
      - test
      - deploy

    build:
      stage: build
      script:
        - docker build -t your-image-name .

    test:
      stage: test
      script:
        - docker run --rm your-image-name npm test

    deploy:
      stage: deploy
      script:
        - docker run -d -p 80:80 your-image-name

- Push to GitLab: When you push changes to your GitLab repository, the pipeline will trigger automatically. It will build the Docker image, run tests, and deploy the application in sequence.
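Both example pipelines build your-image-name without an explicit tag, so every build overwrites the implicit latest tag. One possible refinement, sketched below in shell, derives a unique tag per build from variables the CI server already sets: GitLab CI exports CI_COMMIT_SHORT_SHA and Jenkins exports BUILD_NUMBER. The build_tag helper name, the build- prefix, and the dev fallback are assumptions chosen for illustration.

```shell
# Print a tag that identifies the current CI build.
build_tag() {
  if [ -n "${CI_COMMIT_SHORT_SHA:-}" ]; then
    # GitLab CI: short commit hash of the pipeline's commit
    printf '%s\n' "$CI_COMMIT_SHORT_SHA"
  elif [ -n "${BUILD_NUMBER:-}" ]; then
    # Jenkins: sequential build number of the job
    printf 'build-%s\n' "$BUILD_NUMBER"
  else
    # Outside CI (assumed fallback for local builds)
    printf 'dev\n'
  fi
}

# Usage (requires Docker):
#   docker build -t "your-image-name:$(build_tag)" .
```

With a distinct tag per build, an earlier image stays available on the host or registry, which keeps rollbacks possible.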

Conclusion

Automating deployments with Docker gives teams a consistent, efficient, and scalable way to ship applications. By integrating Docker into CI/CD tools like Jenkins or GitLab CI, development teams can build deployment pipelines that minimize errors and shorten delivery times, leaving more time to focus on delivering high-quality software.

Best Practices for Docker in DevOps

1. Version Control for Docker Images

Implementing version control for your Docker images is crucial for maintaining a reliable and manageable containerized environment. Here are some key practices to ensure effective version control:

- Tagging Images: Use meaningful tags to identify different versions of your Docker images. A common approach is to use semantic versioning (e.g., 1.0.0, 1.0.1) alongside descriptive tags (e.g., latest, stable). This allows for clear identification of changes and facilitates rollbacks if necessary.

- Immutable Images: Treat your Docker images as immutable artifacts. Once an image is built and tagged, it should not be modified. Instead, create a new image for any changes. This practice ensures consistency across deployments and simplifies debugging by preserving the state of previous versions.

- Use a Private Registry: For better security and access control, consider using a private Docker registry for storing your images. This allows you to manage who can access, update, and deploy your images while also providing an audit trail of changes.

- Automate Image Builds: Integrate your Docker image builds into your CI/CD pipeline. Automation ensures that images are built consistently and tested thoroughly before deployment. Use tools like GitLab CI, Jenkins, or GitHub Actions to automate this process.
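The tagging practice above can be sketched as a small shell helper that expands one semantic version into the set of references to apply to an image. The image_tags function name and the choice to also emit a MAJOR.MINOR tag are assumptions for illustration, and the helper expects a plain MAJOR.MINOR.PATCH version.

```shell
# Print one image reference per line for a semantic version (MAJOR.MINOR.PATCH).
image_tags() {
  repo="$1"
  version="$2"
  major="${version%%.*}"        # 1.4.2 -> 1
  minor_patch="${version#*.}"   # 1.4.2 -> 4.2
  minor="${minor_patch%%.*}"    # 4.2   -> 4
  printf '%s:%s\n' "$repo" "$version"          # exact version, treated as immutable
  printf '%s:%s.%s\n' "$repo" "$major" "$minor" # moving tag for the latest patch release
  printf '%s:latest\n' "$repo"                  # moving tag for the newest build
}

# Usage (requires Docker):
#   docker build -t your-image-name:1.4.2 .
#   image_tags your-image-name 1.4.2 | while read -r ref; do
#     docker tag your-image-name:1.4.2 "$ref"
#   done
```

Only the full version tag should be treated as immutable. The shorter tags move with each release, so deployments that need reproducibility should reference the full version.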

2. Security Considerations

Security is paramount in any DevOps strategy, and Docker introduces unique considerations. Here are some best practices for securing your Docker images and containers:

- Start with Minimal Base Images: Begin with minimal base images to reduce the attack surface. Lightweight images like Alpine or scratch are excellent choices. Avoid bloated images that include unnecessary packages, as they increase potential vulnerabilities.

- Regularly Update Base Images: Keep your base images up to date with the latest security patches. Regularly monitor for updates and rebuild your images to incorporate these changes, ensuring you’re protected against known vulnerabilities.

- Scan for Vulnerabilities: Utilize tools like Trivy, Clair, or Snyk to regularly scan your Docker images for vulnerabilities. Incorporate these scans into your CI/CD pipeline to catch issues early in the development process.

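- Implement the Principle of Least Privilege: Configure your containers to run with the minimum permissions necessary. Avoid running containers as the root user, as this increases security risks. Set a non-root user with the USER instruction in your Dockerfile or the --user flag of docker run, and consider Docker’s user namespace remapping so that root inside a container maps to an unprivileged user on the host.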

- Secure Secrets Management: Avoid hardcoding sensitive information, such as API keys or database passwords, directly in your Docker images. Use secrets management tools provided by Docker Swarm or Kubernetes to manage sensitive data securely.
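One way to enforce the non-root practice from the list above is a small lint step in the pipeline that fails when a Dockerfile never switches away from root. The sketch below is a heuristic under stated assumptions: the runs_as_non_root name is made up for this example, the check assumes base images default to root, and it does not resolve line continuations or variables such as USER $APP_USER.

```shell
# Exit 0 when the final build stage of a Dockerfile switches to a non-root user.
runs_as_non_root() {
  user=$(awk '
    toupper($1) == "FROM" { user = "" }   # new stage: user of the base image, assumed root
    toupper($1) == "USER" { user = $2 }   # remember the latest USER in this stage
    END { print user }
  ' "$1")
  case "$user" in
    ""|root|0|root:*|0:*) return 1 ;;     # no USER, or an explicit root user
    *) return 0 ;;
  esac
}

# Usage in a CI job:
#   runs_as_non_root Dockerfile || { echo "Dockerfile runs as root" >&2; exit 1; }
```

Resetting on each FROM line matters for multi-stage builds, where only the user of the final stage applies to the running container.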

3. Efficient Container Management

Efficient container management is essential for maximizing the benefits of Docker in your DevOps workflow. Here are several best practices to enhance your container management strategy:

- Utilize Multi-Stage Builds: Leverage multi-stage builds to create smaller and more efficient Docker images. This approach allows you to separate the build and runtime environments, ensuring that only necessary files are included in the final image.

- Optimize Layering: Optimize the layering of your Docker images to reduce size and improve build times. Combine related commands into single RUN instructions and strategically order your instructions to maximize cache utilization.

- Monitor Resource Usage: Implement monitoring tools to track the resource consumption of your containers. Use tools like Prometheus and Grafana, or Docker’s built-in commands such as docker stats and docker system df, to gain insights into CPU, memory, and disk usage.

- Automate Container Lifecycle Management: Use orchestration tools like Kubernetes or Docker Swarm to manage the lifecycle of your containers. Automate deployment, scaling, and monitoring to ensure your applications run smoothly and efficiently.

- Regular Cleanup: Implement regular cleanup procedures to remove unused images, stopped containers, and dangling volumes. This practice helps free up resources and maintain a tidy Docker environment.
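The cleanup practice above maps onto Docker's prune subcommands. The following shell sketch wraps them in one function that could run from a scheduled job. The docker_cleanup name and the DOCKER override variable are assumptions for illustration, and the -f flag skips the confirmation prompt, so a dry run first is advisable.

```shell
# Remove stopped containers, dangling images, and unused volumes.
# Set DOCKER="echo docker" for a dry run that only prints the commands.
docker_cleanup() {
  docker_cmd="${DOCKER:-docker}"
  $docker_cmd container prune -f   # stopped containers
  $docker_cmd image prune -f       # dangling (untagged) images
  $docker_cmd volume prune -f      # unused volumes; any data in them is lost
}

# Usage (requires Docker):
#   DOCKER="echo docker" docker_cleanup   # preview
#   docker_cleanup                        # actually prune
```

Keeping the three prune commands separate, rather than using a single system-wide prune, makes it easy to drop the volume step on hosts where volumes hold data that must survive.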

Conclusion

Mastering Docker is essential for achieving a seamless DevOps experience. By harnessing the power of containerization, teams can streamline development, enhance collaboration, and ensure consistency across various environments. Docker simplifies the deployment process, allowing for faster iteration cycles and more reliable software delivery. With practices like efficient image management, robust security measures, and effective container orchestration, organizations can optimize their workflows and maximize resource utilization.

As the demand for agile development continues to grow, the ability to leverage Docker becomes increasingly valuable. Embrace the journey of mastering Docker by exploring its features, best practices, and integration within your DevOps pipeline.