
Introduction

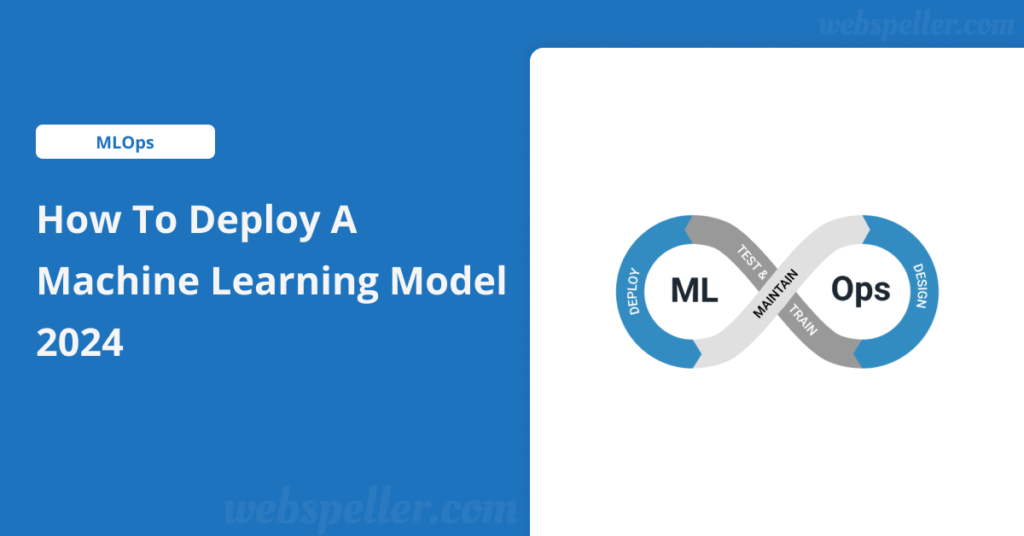

Deploying a machine learning model is one of the most crucial steps in turning a successful prototype into a real-world application. After all the hard work of building and training the model, you need to know how to bring it to production effectively. In this guide, we’ll walk you through each phase of deploying a machine learning model, from development and validation through containerization, integration, and ongoing monitoring.

What is a Machine Learning Model?

Before diving into the deployment process, it’s essential to understand what a machine learning model is. A machine learning model is the result of training an algorithm on historical data so that it can make predictions or decisions without being explicitly programmed for each case. Its real value, however, comes once it is deployed in a production environment, where it can generate insights and predictions on new data, often in real time.

Steps to Deploy a Machine Learning Model

- Model Development and Training: The first step in deploying a machine learning model is developing and training it on existing data. This is an iterative process in which data scientists try different algorithms, train candidate models, and refine them with optimization techniques such as hyperparameter tuning. During this stage, engineers also handle feature engineering and data preprocessing, aiming for a model that is robust and can scale. This step is critical because it lays the foundation for a successful deployment.

- Model Validation and Testing: Once the model has been trained, it must be validated and tested. Validation on held-out data estimates how well the model generalizes to unseen data, which helps detect overfitting before the model reaches real-world users. During this stage, teams compare different versions of the model and select the one that performs best. Validation also gives an early indication of how the model is likely to behave once it runs in production.

- Model Containerization: To make sure the trained model runs the same way in different environments, teams often containerize it. Tools like Docker package the model together with its dependencies, enabling smooth ML deployment across development, testing, and production environments. This step standardizes the deployment process and helps avoid compatibility issues.

- Integration with Production Environment: With the model packaged, the next step is integrating it into the production environment. This typically involves setting up APIs through which the application sends data to the model and receives predictions, processing data in real time where the use case requires it, and managing the infrastructure needed for scaling. During this stage, close collaboration between data scientists, developers, and MLOps engineers is essential to a successful deployment.
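The API integration described in the last step can be sketched with Python’s standard library alone. This is a minimal, hypothetical example: the `LinearModel` stand-in and its weights, the `FEATURES` names, the `MODEL_VERSION` tag, and the `/predict` route are illustrative assumptions, not part of any particular framework. The point is the API contract: validate the incoming JSON, call the model, and return the prediction together with the model version so that every response can be traced back to a specific artifact.

```python
import json
from dataclasses import dataclass
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical values for illustration only.
MODEL_VERSION = "toy-linear-v1"
FEATURES = ("age", "income")  # expected request fields, in model input order


@dataclass(frozen=True)
class LinearModel:
    """Stand-in for a trained model: a fixed linear scorer with made-up weights."""
    weights: tuple
    bias: float

    def predict(self, row: list) -> int:
        score = self.bias + sum(w * x for w, x in zip(self.weights, row))
        return 1 if score > 0 else 0


MODEL = LinearModel(weights=(0.02, 0.00001), bias=-1.5)


def handle_predict(body: bytes) -> tuple:
    """Validate a JSON request body and return (HTTP status code, response dict)."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers JSONDecodeError and undecodable bytes
        return 400, {"error": "request body is not valid JSON"}
    if not isinstance(payload, dict):
        return 400, {"error": "expected a JSON object"}
    missing = [name for name in FEATURES if name not in payload]
    if missing:
        return 400, {"error": f"missing features: {missing}"}
    try:
        row = [float(payload[name]) for name in FEATURES]
    except (TypeError, ValueError):
        return 400, {"error": "features must be numeric"}
    # Returning the model version lets every response be traced to an artifact.
    return 200, {"prediction": MODEL.predict(row), "model_version": MODEL_VERSION}


class PredictHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer: routes POST /predict to handle_predict."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_predict(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Start a local development server; blocks until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts a local server that accepts `POST /predict` with a body such as `{"age": 60, "income": 80000}`. Keeping the request logic in a plain function (`handle_predict`) separate from the HTTP plumbing makes it easy to unit-test; a production deployment would usually put a hardened web server in front of the same logic.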

Challenges in Deploying Machine Learning Models

Deploying a machine learning model is not without its challenges. Here are some of the most common issues encountered:

- Scalability: The infrastructure must support the increasing data loads and handle high levels of traffic without bottlenecks.

- Model Monitoring: Post-deployment, the model’s performance must be constantly monitored to detect potential drifts or declines in accuracy.

- Automation: Automating retraining processes for incoming data is essential to maintain model effectiveness over time.

By one widely cited industry estimate, only about 13% of machine learning models ever make it to production, and challenges like these are a major reason why. With a clear strategy for deployment and model monitoring, however, teams can improve their success rates and maximize each model’s impact.
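To make the monitoring challenge concrete, here is a minimal sketch of a rolling-accuracy check. It assumes that true labels eventually arrive for predictions and that a baseline accuracy was measured during validation; the class name, window size, minimum sample count, and 5-point tolerance are illustrative choices, not recommended values.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent labeled predictions and flag drops.

    Assumes a baseline accuracy measured during validation and that true
    labels eventually arrive (names and defaults are illustrative).
    """

    def __init__(self, baseline: float, tolerance: float = 0.05,
                 window: int = 500, min_samples: int = 50):
        self.baseline = baseline        # validation accuracy to compare against
        self.tolerance = tolerance      # allowed drop before alerting
        self.min_samples = min_samples  # avoid alerting on a handful of samples
        # 1 = correct, 0 = wrong; once full, the oldest outcome is dropped first.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, label) -> None:
        self.outcomes.append(1 if prediction == label else 0)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing has been recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        if len(self.outcomes) < self.min_samples:
            return False
        return self.accuracy() < self.baseline - self.tolerance
```

Because the window only holds recent outcomes, a gradual decline shows up as the old, mostly correct predictions age out. In a deployed system, `should_alert()` would typically feed a dashboard or paging tool rather than being checked by hand.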

Best Practices for Machine Learning Model Deployment

Deploying machine learning models successfully requires careful planning and adherence to best practices. Here are some key practices to follow:

- Collaborate Early and Often: Ensure open communication between data scientists and the operations team. This collaboration fosters agility and helps streamline the machine learning model deployment process.

- Use Version Control: Track versions of models, training data, and data pipelines so you can reproduce any deployed model and manage changes efficiently.

- Invest in Monitoring Tools: Set up a machine learning model monitoring dashboard to track performance in real time. This will allow you to catch issues early and optimize the model’s output.
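As a sketch of what tracking model versions can mean in practice, the hypothetical helpers below fingerprint a serialized model and its training data with SHA-256 and store them, along with hyperparameters and validation metrics, in a JSON-lines registry file. The record layout and function names are assumptions for illustration; dedicated model-registry tools cover the same need more completely.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Content fingerprint: identical bytes always give the same hash."""
    return hashlib.sha256(data).hexdigest()


def make_version_record(model_bytes: bytes, training_data: bytes,
                        params: dict, metrics: dict) -> dict:
    """Tie a serialized model to the exact data and settings that produced it."""
    return {
        "model_sha256": sha256_hex(model_bytes),
        "data_sha256": sha256_hex(training_data),
        "params": params,
        "metrics": metrics,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def append_to_registry(path: str, record: dict) -> None:
    """Append one JSON object per line, which keeps the registry easy to diff and grep."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

Hashing the artifacts rather than relying on file names means two records with the same `model_sha256` are guaranteed to describe byte-identical models, which makes rollbacks and audits unambiguous.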

Improving Machine Learning Models Post-Deployment

Even after successful deployment, the work doesn’t stop. You need to keep improving the machine learning model to keep up with new data, changing requirements, and potential model drift. Continuous integration and continuous deployment (CI/CD) pipelines help here, as they can automate retraining on newly collected data and redeploying the updated model.

Moreover, keep an eye on model metrics and make adjustments where needed. For instance, if accuracy starts to decline, revisit the training data or adjust the model’s hyperparameters to get it back on track.
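One common way to automate retraining and redeployment safely is a promotion gate, often called a champion/challenger check: the pipeline scores a freshly retrained candidate and the current production model on the same held-out labeled data, and deploys the candidate only if it does better. The sketch below assumes accuracy is the metric that matters and that both models’ predictions are already computed; the function names and the `min_gain` margin are hypothetical.

```python
def accuracy(predictions: list, labels: list) -> float:
    """Fraction of predictions that match the true labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def should_promote(candidate_preds: list, production_preds: list,
                   labels: list, min_gain: float = 0.0) -> tuple:
    """Decide whether a retrained candidate should replace the production model.

    Both models are scored on the same held-out labels. The candidate is promoted
    only if its accuracy beats production by more than `min_gain`; ties keep the
    current model, which avoids redeploying an equivalent model.
    Returns (promote?, candidate accuracy, production accuracy).
    """
    cand_acc = accuracy(candidate_preds, labels)
    prod_acc = accuracy(production_preds, labels)
    return cand_acc > prod_acc + min_gain, cand_acc, prod_acc
```

In a CI/CD pipeline, a `False` result would simply stop the deployment stage and keep the current model serving, while the scores are logged for review.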

How to Monitor Machine Learning Models in Production

Monitoring machine learning (ML) models in production is crucial to maintaining high performance. While model development and training get significant attention, once deployed, models face real-world challenges that require ongoing monitoring and updates. As Apple engineers highlighted in their Overton system paper, maintaining and improving deployed models is often more costly than building them.

To ensure AI systems deliver business value, organizations focus on monitoring. Key aspects of this process include monitoring data and supervising data labeling.

Why is Monitoring Important in Machine Learning?

Once an ML model is deployed, its performance in real-life scenarios may differ from experimental results. As these models support business needs, they must remain stable, available, and perform at high levels, ensuring they meet specific accuracy thresholds. Failure to maintain performance can result in a significant business impact, such as revenue loss.

Challenges of Monitoring Machine Learning Models

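- Performance Metrics in Real Time: Monitoring performance in real time is difficult because:

  - Model intervention: The model’s actions influence the data observed after the prediction. For example, if a fraud model blocks a transaction, you may never learn whether that transaction was actually fraudulent.

  - Delayed ground truth: The true outcome of a prediction may not be available immediately; whether a loan defaults, for instance, may only become known months later.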

- Collaboration for Monitoring: Effective model monitoring requires collaboration between different teams. Human-in-the-loop approaches are essential for tasks such as:

  - Validating low-confidence predictions

  - Relabeling data for model retraining
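Delayed ground truth and human-in-the-loop review both come down to bookkeeping: predictions must be logged with an ID so that labels arriving later, from the real world or from reviewers, can be joined back to them. The in-memory `PredictionLog` below is a hypothetical sketch of that idea; a real system would persist this in a database or event stream, and the 0.6 review threshold is arbitrary.

```python
from dataclasses import dataclass, field


@dataclass
class PredictionLog:
    """Hypothetical in-memory log joining predictions with late-arriving labels."""
    review_threshold: float = 0.6
    predictions: dict = field(default_factory=dict)   # request_id -> (predicted, confidence)
    labels: dict = field(default_factory=dict)        # request_id -> true label
    review_queue: list = field(default_factory=list)  # request_ids sent to human reviewers

    def log_prediction(self, request_id, predicted, confidence: float) -> None:
        self.predictions[request_id] = (predicted, confidence)
        # Low-confidence predictions are routed to humans for validation.
        if confidence < self.review_threshold:
            self.review_queue.append(request_id)

    def log_ground_truth(self, request_id, true_label) -> None:
        # Labels may arrive much later, or come from human reviewers.
        self.labels[request_id] = true_label

    def matured_accuracy(self):
        """Accuracy over predictions whose ground truth has arrived (None if none has)."""
        matched = [rid for rid in self.labels if rid in self.predictions]
        if not matched:
            return None
        correct = sum(self.predictions[rid][0] == self.labels[rid] for rid in matched)
        return correct / len(matched)

    def label_coverage(self) -> float:
        """Share of logged predictions that already have a ground-truth label."""
        if not self.predictions:
            return 0.0
        return sum(rid in self.labels for rid in self.predictions) / len(self.predictions)
```

Reporting `label_coverage()` next to `matured_accuracy()` matters: an accuracy figure computed on only a small, possibly unrepresentative fraction of labeled predictions can be misleading.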

Monitoring Data to Detect Changes

Two primary factors can degrade ML model performance over time:

- Data Pipeline Issues: Data collection or processing can break due to API updates or sensor failures, leading to unexpected data inputs.

- Data Distribution Changes: Over time, real-world data evolves, making the original training data less relevant. For instance, spam detection models face new challenges as scammers adapt their tactics.

Unlike in traditional software development, tests cannot cover every input a model will encounter, so continuous monitoring in production is crucial for catching these issues. Monitoring tools should track input data statistics such as:

- Missing values

- Univariate statistics (mean, standard deviation)

- Average word count (for text inputs)

- Brightness and contrast levels in images

Sudden changes in these metrics can signal data issues, prompting investigation and model updates.
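The statistics listed above are cheap to compute for each batch of inputs. The sketch below is one possible implementation using only Python’s standard library; the function names and the profile dictionary layout are assumptions. It treats `None` and NaN as missing numeric values, counts non-string entries as missing text, and, for a flattened grayscale image, uses mean pixel intensity as brightness and the standard deviation of intensities (RMS contrast) as contrast.

```python
import math
import statistics


def _missing_rate(total: int, present: int) -> float:
    return (total - present) / total if total else 0.0


def numeric_profile(values: list) -> dict:
    """Missing-value rate plus mean and population standard deviation of present values."""
    present = [v for v in values
               if v is not None and not (isinstance(v, float) and math.isnan(v))]
    return {
        "missing_rate": _missing_rate(len(values), len(present)),
        "mean": statistics.fmean(present) if present else None,
        "std": statistics.pstdev(present) if present else None,
    }


def text_profile(texts: list) -> dict:
    """Missing-value rate and average whitespace-separated word count of text inputs."""
    present = [t for t in texts if isinstance(t, str)]
    word_counts = [len(t.split()) for t in present]
    return {
        "missing_rate": _missing_rate(len(texts), len(present)),
        "avg_word_count": statistics.fmean(word_counts) if word_counts else None,
    }


def image_profile(pixels: list) -> dict:
    """Brightness (mean intensity) and RMS contrast (std of intensities); pixels must be non-empty."""
    return {
        "brightness": statistics.fmean(pixels),
        "contrast": statistics.pstdev(pixels),
    }
```

Computing the same profile once on the training data gives a reference to compare each production batch against.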

Preventing Data Issues

To minimize risks:

- Track third-party libraries and APIs for updates that might affect your data pipeline.

- Set alerts for any unexpected changes in input data metrics, such as an increase in missing values or drastic changes in statistics.
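An alert rule can then compare each incoming batch’s statistics with a reference profile computed on the training data. The hypothetical `check_drift` function below is a deliberately simple heuristic: it flags a rise in the missing-value rate above a fixed margin, a batch with no usable values, and a shift in the mean larger than a chosen number of reference standard deviations. Profiles are assumed to be dicts with `missing_rate`, `mean`, and `std` keys, and the thresholds are placeholders to tune per feature, not recommended defaults.

```python
def check_drift(reference: dict, current: dict,
                max_missing_increase: float = 0.05,
                max_mean_shift_in_std: float = 3.0) -> list:
    """Return alert messages when a batch profile deviates from a reference profile.

    Both profiles are dicts with "missing_rate", "mean", and "std" keys;
    "mean" and "std" may be None when no values were present.
    """
    alerts = []
    if current["missing_rate"] - reference["missing_rate"] > max_missing_increase:
        alerts.append(
            f"missing-value rate rose from {reference['missing_rate']:.1%} "
            f"to {current['missing_rate']:.1%}"
        )
    if current["mean"] is None:
        if reference["mean"] is not None:
            alerts.append("current batch has no non-missing values")
    elif reference["mean"] is not None and reference["std"]:
        # Express the shift in units of the reference spread so that one
        # threshold works across features with different scales.
        shift = abs(current["mean"] - reference["mean"]) / reference["std"]
        if shift > max_mean_shift_in_std:
            alerts.append(f"mean shifted by {shift:.1f} reference standard deviations")
    return alerts
```

Running this per feature on every batch and forwarding any non-empty result to an alerting channel covers both examples in the list above. More formal distribution tests exist; this version trades rigor for being easy to read and reason about.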

By monitoring these aspects effectively, you can maintain high performance and ensure your models continue to meet business needs.

Final Thoughts on Machine Learning Model Deployment

Deploying a machine learning model is a complex but essential part of applying AI to solve business problems. From development and testing to monitoring and scaling, each step requires careful attention to detail. But by following this step-by-step guide, you’ll be well on your way to deploying machine learning models that perform reliably in production environments.

Keep in mind that deployment is an ongoing process, not a one-time event. Monitoring and improving the model post-deployment ensures that it continues to deliver value and remains relevant to the evolving data landscape.